Mataigne, S., Mathe, J., Sanborn, S., Hillar, C., Miolane, N.

Abstract

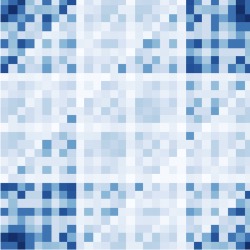

An important problem in signal processing and deep learning is to achieve invariance to nuisance factors not relevant for the task. Since many of these factors are describable as the action of a group G (e.g. rotations, translations, scalings), we want methods to be G-invariant. The G-Bispectrum extracts every characteristic of a given signal up to group action: for example, the shape of an object in an image, but not its orientation. Consequently, the G-Bispectrum has been incorporated into deep neural network architectures as a computational primitive for G-invariance, akin to a pooling mechanism but with greater selectivity and robustness. However, the computational cost of the G-Bispectrum ($O(|G|^2)$, with $|G|$ the size of the group) has limited its widespread adoption. Here, we show that the G-Bispectrum computation contains redundancies that can be reduced into a selective G-Bispectrum with $O(|G|)$ complexity. We prove desirable mathematical properties of the selective G-Bispectrum and demonstrate how its integration in neural networks enhances accuracy and robustness compared to traditional approaches, while enjoying considerable speed-ups compared to the full G-Bispectrum.
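To make the $O(|G|^2)$ versus $O(|G|)$ contrast concrete, here is a minimal NumPy sketch for the simplest case that can be checked by hand: the cyclic group $\mathbb{Z}_n$ acting on a periodic 1D signal by cyclic shifts. It assumes the standard Fourier form of the bispectrum on $\mathbb{Z}_n$, $B[k_1,k_2] = F[k_1]\,F[k_2]\,\overline{F[(k_1+k_2) \bmod n]}$, where $F$ is the DFT of the signal. The selective subset used here ($B[0,0]$ plus the row $B[1,k]$ for $k = 0,\dots,n-1$, i.e. $n+1$ values) is an illustrative choice for this sketch and not necessarily the exact selection made in the paper; the function names are hypothetical. The inversion routine assumes every Fourier coefficient of the signal is nonzero.

```python
import numpy as np


def full_bispectrum(x):
    """All n^2 coefficients B[k1, k2] = F[k1] F[k2] conj(F[(k1 + k2) mod n]) on Z_n."""
    F = np.fft.fft(x)
    n = len(F)
    k = np.arange(n)
    # Outer product F[k1] F[k2], times the conjugate coefficient at the summed index.
    return np.outer(F, F) * np.conj(F[(k[:, None] + k[None, :]) % n])


def selective_bispectrum(x):
    """Hypothetical O(n) subset: B[0, 0] followed by the row B[1, k], k = 0..n-1."""
    F = np.fft.fft(x)
    n = len(F)
    k = np.arange(n)
    b00 = F[0] * F[0] * np.conj(F[0])
    row1 = F[1] * F[k] * np.conj(F[(1 + k) % n])
    return np.concatenate(([b00], row1))


def invert_selective(b):
    """Recover a signal from selective_bispectrum output, up to a cyclic shift.

    Assumes every Fourier coefficient of the original signal is nonzero.
    """
    n = len(b) - 1
    b00, row1 = b[0], b[1:]
    F = np.zeros(n, dtype=complex)
    # B[0,0] = |F0|^2 F0, so |F0| = |B00|^(1/3) and F0 carries the phase of B00.
    F[0] = b00 / np.abs(b00) ** (2.0 / 3.0)
    # B[1,0] = |F1|^2 F0 gives |F1|; the phase of F1 is provisionally set to 0.
    F[1] = np.sqrt(np.abs(row1[0] / F[0]))
    # B[1,k] = F1 F[k] conj(F[k+1]) lets us solve for F[k+1] one step at a time.
    for k in range(1, n - 1):
        F[k + 1] = np.conj(row1[k] / (F[1] * F[k]))
    # The wrap-around term B[1, n-1] = F1 F[n-1] conj(F0) pins the phase of F1
    # down to a multiple of 2*pi/n, i.e. down to an integer cyclic shift.
    predicted = F[1] * F[n - 1] * np.conj(F[0])
    theta = np.angle(row1[n - 1] / predicted) / n
    F = F * np.exp(1j * theta * np.arange(n))
    return np.fft.ifft(F)


# Usage: compare a random signal with a cyclically shifted copy of itself.
rng = np.random.default_rng(0)
x = rng.standard_normal(8)
x_shifted = np.roll(x, 3)  # translate the signal by 3 samples
b_sel = selective_bispectrum(x_shifted)
x_rec = invert_selective(b_sel)
```

Both bispectra are unchanged by the shift, but for $n = 8$ the full one stores $n^2 = 64$ coefficients while this selective subset stores $n + 1 = 9$. The recovered `x_rec` matches `x` only up to a cyclic shift, which is exactly the nuisance factor that invariance is meant to discard.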

Citation

Mataigne, S., Mathe, J., Sanborn, S., Hillar, C., Miolane, N. (2024). The Selective G-Bispectrum and its Inversion: Applications to G-Invariant Networks. NeurIPS.

BibTeX

@article{mataigne2024selective,
  title={The Selective G-Bispectrum and its Inversion: Applications to G-Invariant Networks},
  author={Mataigne, S. and Mathe, J. and Sanborn, S. and Hillar, C. and Miolane, N.},
  journal={Advances in Neural Information Processing Systems},
  volume={37},
  year={2024},
}