Haputhanthri, U., Storan, L., Jiang, Y., Raheja, T., Shai, A., Akengin, O., Miolane, N., Schnitzer, M. J., Dinc, F., & Tanaka, H.

Abstract

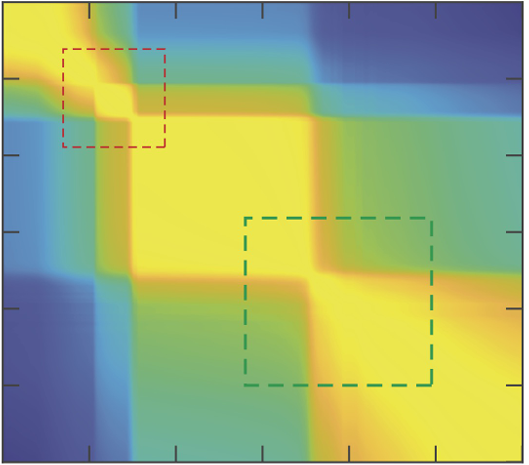

Training recurrent neural networks (RNNs) is a high-dimensional process that requires updating numerous parameters. Therefore, it is often difficult to pinpoint the underlying learning mechanisms. To address this challenge, we propose to gain mechanistic insights into the phenomenon of *abrupt learning* by studying RNNs trained to perform diverse short-term memory tasks. In these tasks, RNN training begins with an initial search phase. Following a long period of plateau in accuracy, the values of the loss function suddenly drop, indicating abrupt learning. Analyzing the neural computation performed by these RNNs reveals geometric restructuring (GR) in their phase spaces prior to the drop. To promote these GR events, we introduce a temporal consistency regularization that accelerates (bioplausible) training, facilitates attractor formation, and enables efficient learning in strongly connected networks. Our findings offer testable predictions for neuroscientists and emphasize the need for goal-agnostic secondary mechanisms to facilitate learning in biological and artificial networks.

Citation

Haputhanthri, U., Storan, L., Jiang, Y., Raheja, T., Shai, A., Akengin, O., Miolane, N., Schnitzer, M. J., Dinc, F., & Tanaka, H. (2025). Understanding and controlling the geometry of memory organization in RNNs.

BibTeX

@article{haputhanthri2025understanding,
  title={Understanding and controlling the geometry of memory organization in RNNs},
  author={Haputhanthri and Storan and Jiang and Raheja and Shai and Akengin and Miolane and Schnitzer and Dinc and Tanaka},
  journal={arXiv preprint arXiv:2502.07256},
  year={2025}
}