Pub Venue

Submitted to NeurIPS

Authors

Kunin, D., Marchetti, G. L., Chen, F., Karkada, D., Simon, J. B., DeWeese, M. R., Ganguli, S., Miolane, N.

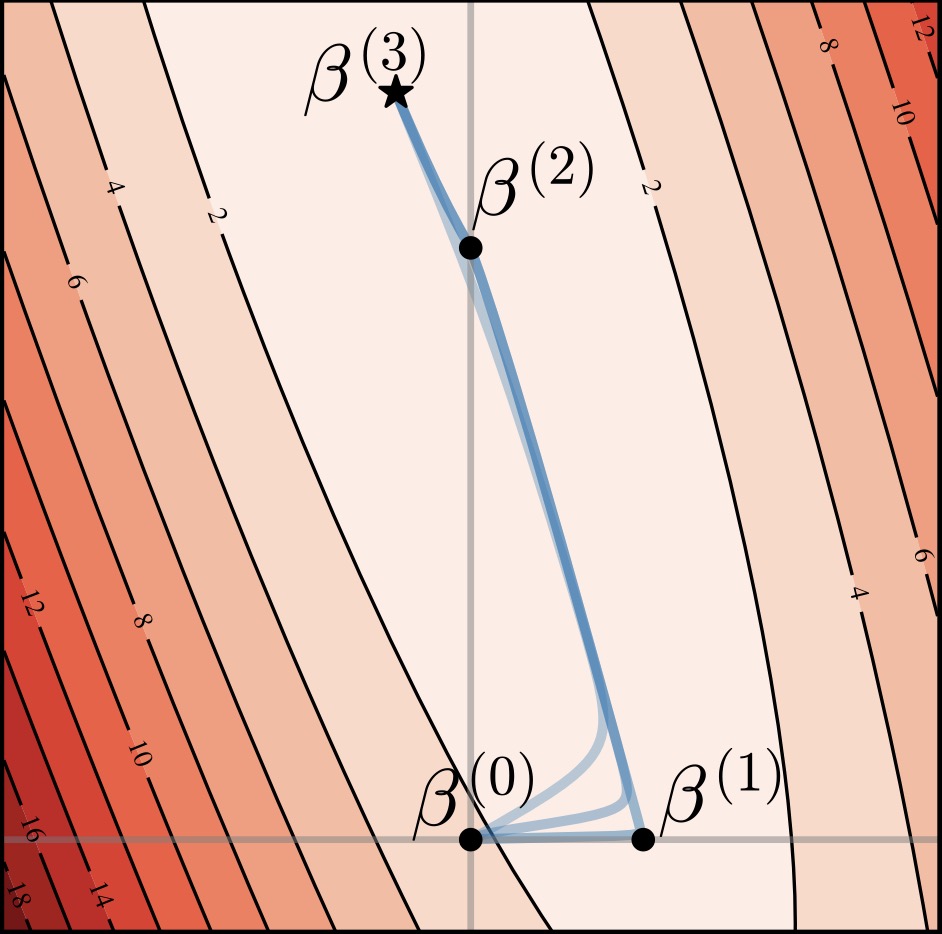

This paper introduces Alternating Gradient Flows (AGF), a framework that models feature learning in two-layer networks trained from small initialization as an alternating process of neuron activation and loss minimization. AGF explains the timing and structure of loss drops during training, unifying prior analyses and revealing that networks learn features like principal components or Fourier modes in a predictable order.
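The sketch below does not implement AGF. It only illustrates the kind of behavior AGF is meant to explain: a two-layer network trained from small initialization learns features one at a time, with a plateau followed by a loss drop for each one. To keep it short it assumes a two-layer *linear* network, whitened inputs (so the population loss reduces to 0.5 * ||W2 W1 - T||_F^2), and a diagonal target T whose modes play the role of principal components. The function names, target singular values (4, 2, 1), and hyperparameters are hypothetical choices made for illustration.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(row) for row in zip(*a)]


def train_linear_net(singular_values=(4.0, 2.0, 1.0), init_scale=1e-3,
                     lr=0.05, steps=600, seed=0):
    """Gradient descent on L = 0.5 * ||W2 @ W1 - T||_F^2 with T = diag(singular_values).

    Assumption: inputs are whitened (E[x x^T] = I), so this is the population
    squared error of y = W2 W1 x against the target y* = T x.
    Returns the per-step loss curve and, for each mode i, the first step at
    which the learned product reaches half of its target value s_i.
    """
    rng = random.Random(seed)
    n = len(singular_values)
    target = [[singular_values[i] if i == j else 0.0 for j in range(n)] for i in range(n)]
    # Small random initialization: the regime in which features are learned sequentially.
    w1 = [[rng.gauss(0.0, init_scale) for _ in range(n)] for _ in range(n)]
    w2 = [[rng.gauss(0.0, init_scale) for _ in range(n)] for _ in range(n)]
    losses = []
    learned_at = [None] * n
    for step in range(steps):
        prod = matmul(w2, w1)
        err = [[prod[i][j] - target[i][j] for j in range(n)] for i in range(n)]
        losses.append(0.5 * sum(e * e for row in err for e in row))
        # Record when each mode (a diagonal entry of the product) is half learned.
        for i in range(n):
            if learned_at[i] is None and prod[i][i] >= 0.5 * singular_values[i]:
                learned_at[i] = step
        grad_w2 = matmul(err, transpose(w1))  # dL/dW2 = E W1^T
        grad_w1 = matmul(transpose(w2), err)  # dL/dW1 = W2^T E
        w2 = [[w2[i][j] - lr * grad_w2[i][j] for j in range(n)] for i in range(n)]
        w1 = [[w1[i][j] - lr * grad_w1[i][j] for j in range(n)] for i in range(n)]
    return losses, learned_at


if __name__ == "__main__":
    losses, learned_at = train_linear_net()
    print("step at which each mode is half learned:", learned_at)
    for step in range(0, len(losses), 50):
        print(step, round(losses[step], 4))
```

Printing the loss curve should show long plateaus separated by sharp drops, with the mode of largest singular value learned first. In this linear setting the ordering follows from each mode growing at a rate proportional to its singular value while its weights are still small.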


